Roboflow 100-VL

A Multi-Domain Object Detection Benchmark for Vision-Language Models

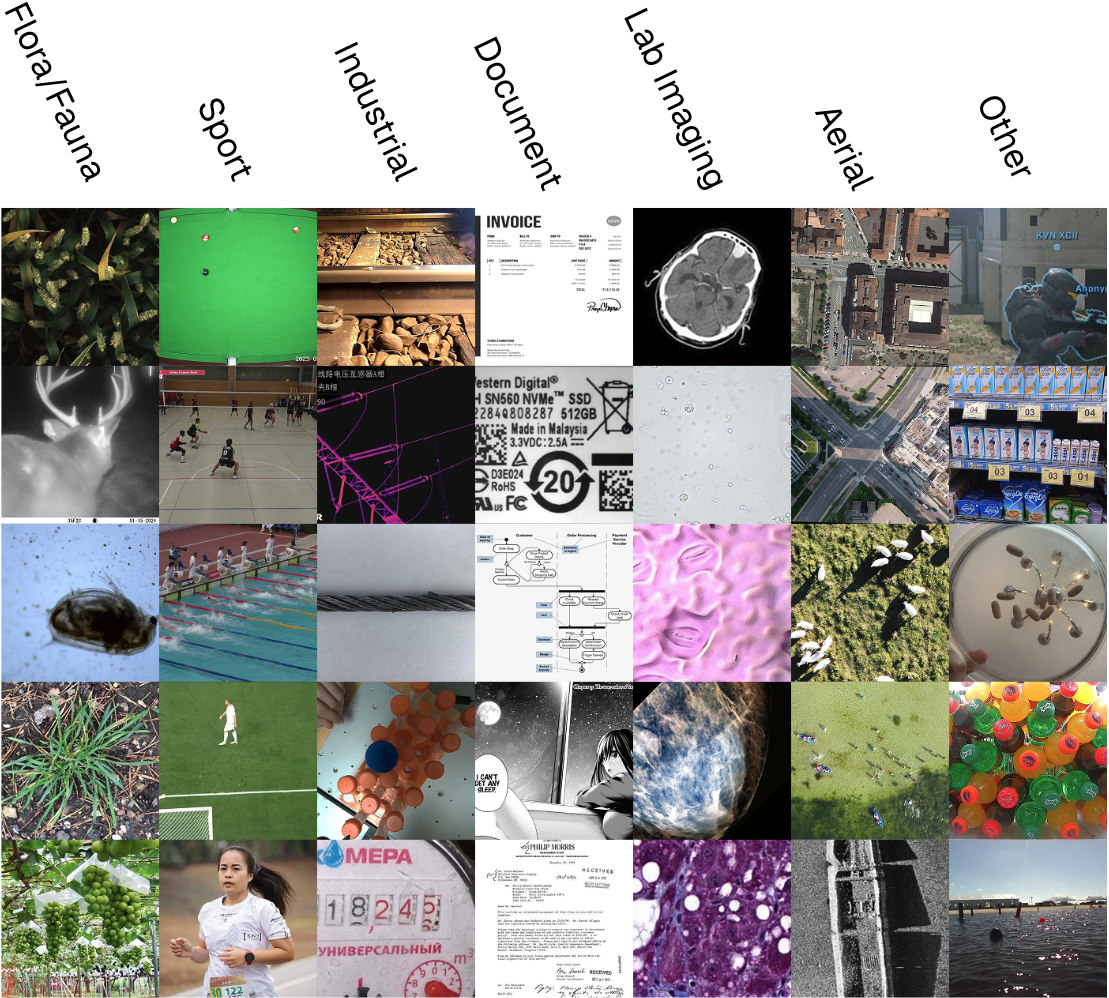

Roboflow 100 Vision Language (RF100-VL) is the first benchmark to ask, “How well does your VLM understand the real world?” In pursuit of this question, RF100-VL introduces 100 open source datasets containing object detection bounding boxes and multimodal few shot instruction with visual examples and rich textual descriptions across novel image domains. The dataset is comprised of 164,149 images and 1,355,491, annotations across seven domains, including aerial, biological, and industrial imagery. 1693 labeling hours were spent labeling, reviewing, and preparing the dataset.

RF100-VL is a curated sample from Roboflow Universe, a repository of over 500,000+ datasets that collectively demonstrate how computer vision is being leveraged in production problems today. Current state-of-the-art models trained on web-scale data like QwenVL2.5 and GroundingDINO achieve as low as 2% AP in some categories represented in RF100-VL.

Abstract

Vision-language models (VLMs) trained on internet-scale data achieve remarkable zero-shot detection performance on common objects like car, truck, and pedestrian. However, state-of-the-art models still struggle to generalize to out-of-distribution tasks (e.g. material property estimation, defect detection, and contextual action recognition) and imaging modalities (e.g. X-rays, thermal-spectrum data, and aerial images) not typically found in their pre-training. Rather than simply re-training VLMs on more visual data (the dominant paradigm for few-shot learning), we argue that one should align VLMs to new concepts with annotation instructions containing a few visual examples and rich textual descriptions. To this end, we introduce Roboflow100-VL, a large-scale collection of 100 multi-modal datasets with diverse concepts not commonly found in VLM pre-training. Notably, state-of-the-art models like GroundingDINO and Qwen2.5-VL achieve less than 2% zero-shot accuracy on challenging medical imaging datasets within Roboflow100-VL, demonstrating the need for few-shot concept alignment.

Explore the Dataset

You can explore the RF100-VL dataset with our interactive, CLIP-based vector chart below.

The chart illustrates the discrete clusters of data that comprise the RF100-VL dataset.

Contributions

RF100-VL introduces a novel evaluation for assessing the efficacy of vision language models (VLMs) and traditional object detectors in real world settings. As models become increasingly capable, they need to be compared to real world, difficult, and practical scenarios. The Roboflow open source community is increasingly representative of how computer vision is truly being used in real world settings, spanning over 500M user labeled and shared images. RF100-VL is the culmination of aggregating the best high quality open source examples (including those cited in Nature) from a wide array of domains, then relabeling and verifying annotation quality. It supports few shot object detection from image prompts, annotator instructions, and human readable class names. RF100-VL builds on RF100, a benchmark Roboflow introduced at CVPR 2023 that labs from companies like Apple, Baidu, and Microsoft leverage to benchmark vision model capabilities.

CVPR 2025 Workshop Challenge: Few-Shot Object Detection from Annotator Instructions

Organized by: Anish Madan, Neehar Peri, Deva Ramanan

Introduction

This challenge focuses on few-shot object detection (FSOD) with 10 examples of each class provided by a human annotator. Existing FSOD benchmarks repurpose well-established datasets like COCO by partitioning categories into base and novel classes for pre-training and fine-tuning respectively. However, these benchmarks do not reflect how FSOD is deployed in practice.

Rather than pre-training on only a small number of base categories, we argue that it is more practical to download a foundational model (e.g., a vision-language model (VLM) pretrained on web-scale data) and fine-tune it for specific applications. We propose a new FSOD benchmark protocol that evaluates detectors pre-trained on any external dataset (not including the target dataset), and fine-tuned on K-shot annotations per C target classes.

We evaluate a subset of 20 datasets from Roboflow-VL. Each dataset is independently evaluated using AP. Roboflow-VL includes datasets that are out-of-distribution from typical internet-scale pre-training data, making it a particularly challenging (even for VLMs) for Foundational FSOD.

Benchmarking Protocols

Goal: Developing robust object detectors using few annotations provided by annotator instructions. The detector should detect object instances of interest in real-world testing images.

Environment for model development:

- Pretraining: Models are allowed to pre-train on any existing datasets.

- Fine-Tuning: Models can fine-tune on 10 shots from each of RF20-VL-FSOD's datasets.

- Evaluation: Models are evaluated on RF20-VL-FSOD's test set. Each dataset is evaluated independently.

Evaluation metrics:

- AP: The average precision of IoU thresholds from 0.5 to 0.95 with the step size 0.05.

Submission Details

Submit a zip file with pickle files for each dataset. The name of each pickle file should match the name of each dataset. Each pickle file should use the following COCO format.

[

"image_id": int,

"instances":

[{ "image_id": int,

"category_id": int,

"bbox": [x,y,width,height],

"score": float

},

{"image_id": int,

"category_id": int,

"bbox": [x,y,width,height],

"score": float }, ... ,],

...,

]

We've provided a sample submission for your reference. Submissions should be uploaded to our EvalAI Server.

Official Baseline

We pre-train Detic on ImageNet21-K, COCO Captions, and LVIS. We evaluate this pre-trained model zero-shot on the datasets in RF20-VL. Our baseline code is available here.

Timeline

- Submission opens: March 15th, 2025

- Submission closes: June 8th, 2025, 11:59 pm Pacific Time

- The top 3 participants on the leaderboard will be invited to give a talk at the workshop

References

- Madan et. al. "Revisiting Few-Shot Object Detection with Vision-Language Models." Proceedings of the Conference on Neural Information Processing Systems. 2024

- Zhou et. al. "Detecting Twenty-Thousand Classes Using Image-Level Supervision". Proceedings of the IEEE European Conference on Computer Vision. 2022

Citation

You can cite RF100-VL in your research using the following citation:

@article{robicheaux2025roboflow100vl,

title={Roboflow100-VL: A multi-domain object detection benchmark for vision-language models},

author={Robicheaux, Peter and Popov, Matvei and Madan, Anish and Robinson, Isaac and Nelson, Joseph and Ramanan, Deva and Peri, Neehar},

journal={Advances in Neural Information Processing Systems},

year={2025}

}